☆

1

Comments:

### Style

I like the Levin style, he writes a bit provocative phrases that looks, in the same time, incredibly truthful (at least for the author himself). Consider for example what he says about the invention of positional numeral systems and Quantum computers:

> Archimedes made a great discovery that digital representation of numbers is exponentially

> more efficient than analog ones (sand pile sizes). Many subsequent analog devices yielded

> unimpressive results. It is not clear why QCs [quantum computers] should be an exception.

### One-way function and the axiom of choice

One-way functions and the axiom of choice deals with practical

or conceptual (im-)possibility to solve [inverse problems](https://en.wikipedia.org/wiki/Inverse_problem) arising in Computer Science and Mathematics.

### Kolmogorov complexity and One-way functions

Two primes $p$ and $q$ have almost the same [informational content](https://en.wikipedia.org/wiki/Algorithmic_information_theory) as their product $pq$.

However, the [$p, q \mapsto pq$](https://en.wikipedia.org/wiki/Computational_complexity_of_mathematical_operations#Arithmetic_functions) is much more easy to do than

[$pq \mapsto p,q$](https://en.wikipedia.org/wiki/Integer_factorization#Prime_decomposition).

☆

1

Comments:

## Interesting paper!

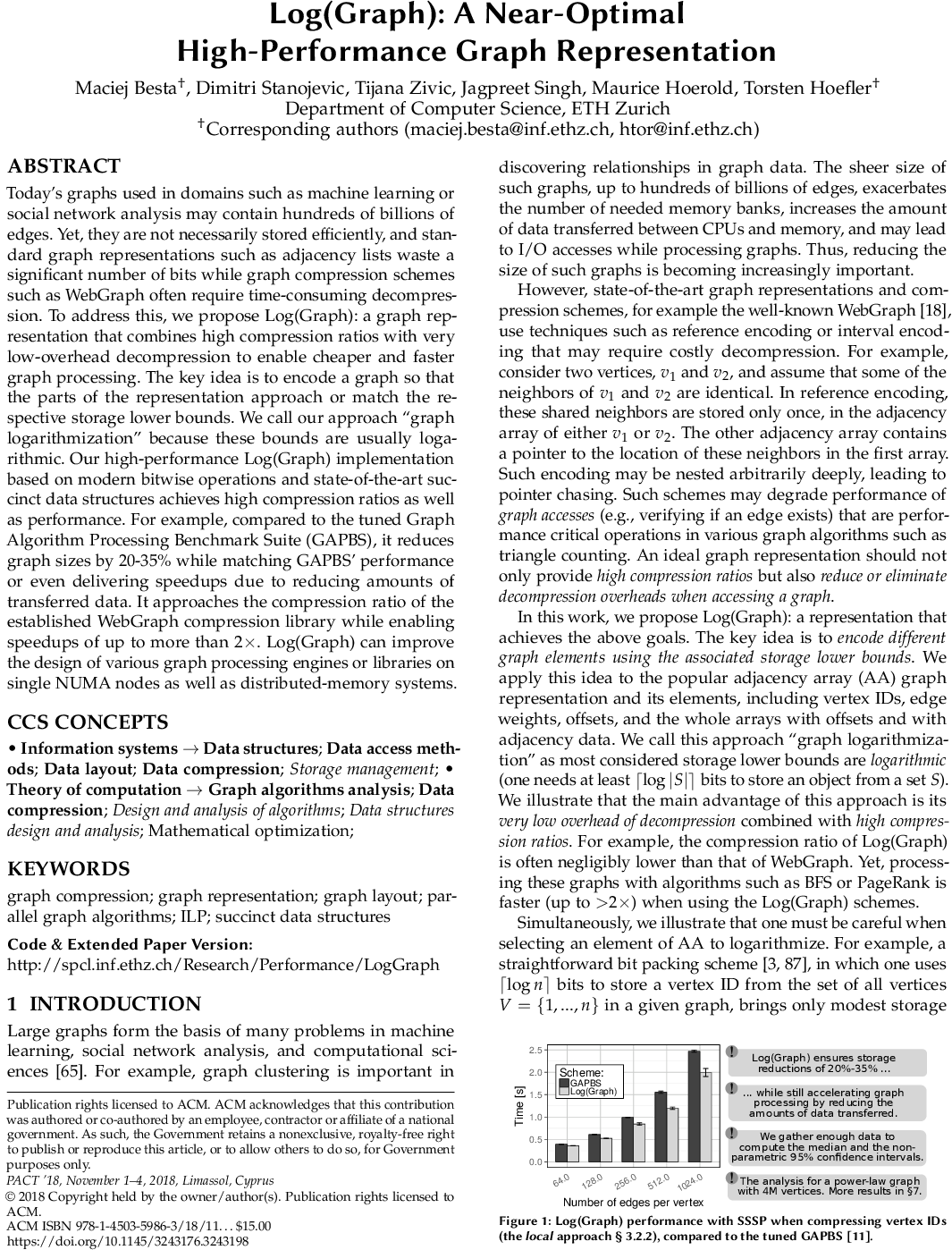

Code is coming soon: http://spcl.inf.ethz.ch/Research/Performance/LogGraph/

The method can reduce the size needed to store the input graph by 35% compared to the adjacency array format, while not making computations slower.

Several compression frameworks are suggested in the paper. All of them use fixed length compression codes contrarily the WebGraph framework.

One of the compression frameworks leads to a space of $\sum_{v\in V} \log(\widehat{N_v})\cdot d_v+\log(\log(\widehat{N_v}))$ bits (cf. section 3.2, $\widehat{N_v}$ is the maximum ID of the neighbors of node $v$) as it uses $\log(\widehat{N_v})$ bits to store the $d_v$ neighbors of each node $v$ and $\log(\log(\widehat{N_v}))$ bits to store the length of the codes used for each node $v$. Note that an additional cost is needed in order to have a direct access to the neighbors of each node (this is done using succinct bit vectors (it seems to lead to a total number of bits close to $n\cdot \log(m)$)). The nodes can be renumbered such that the number of bits is minimized.

### Approaches the compression ratio of WebGraph

I read that the suggested compression method "approaches the compression ratio of the established WebGraph compression library". What does it mean exactly? The Webgraph compression library can compress web graphs using less than 2 bits per link. The suggested compression framework seems to be far from that compression ratio.

So I think that what is meant is that on graphs other than web graphs the suggested method approaches the compression ratio of the Webgraph library. Am I correct?

### Java VS C++

I read "We use the WebGraph original tuned Java implementation for gathering the data on compression ratios but, as Log(Graph) is developed in C++, we use a proof-of-a-concept C++ implementation of WG schemes for a fair C++ based performance analysis.".

I do not understand. This is because the original Java implementation is slower than a C++ implementation? Which C++ implementation was used for WG?

### Cost function in section 3.6

The quantity to minimize should be $\sum_{v\in V} \log(\widehat{N_v})\cdot d_v$ (cf. section 3.2, $\widehat{N_v}$ is the maximum ID of the neighbors of node $v$), that is intuitively minimizing $\widehat{N_v}$ for nodes with high $d_v$.

It is not clear to me why this other counter-intuitive objective is used: $\sum_{v\in V} \widehat{N_v}\cdot \frac{1}{d_v}$.

### Webgraph decompression impacts running time

I agree that referencing nodes can impact the running time (especially if there are chains of references). However, it is possible to use the WebGraph framework without using referencing, that is only using gap encoding with variable length instantaneous codes.

I think that doing that does not impact significantly the running time, does it?

### Typos and minors

- Section 4.2.: "There are exactly $Q$ bit vectors of length with n ones and the storage lower bound is $Q$ bits." -> the storage lower bound is $\log(Q)$ bits.

Some people discuss the paper (and troll the paper authors) on hackernews:

https://news.ycombinator.com/item?id=18081978

Comments: