Welcome to Maximimi's library,

Here you can find all papers liked or uploaded by Maximimi, together with a brief user bio and a description of her/his academic activity.

[Link to my homepage](https://sites.google.com/view/danisch/home)

## I will read the following papers.

- [Quasi-Succinct Indices](https://papers-gamma.link/paper/130)

- [PageRank as a Function of the Damping Factor](https://papers-gamma.link/paper/106)

- [Graph Stream Algorithms: A Survey](https://papers-gamma.link/paper/102)

- [Network Sampling: From Static to Streaming Graphs](https://papers-gamma.link/paper/122)

- [The Protein-Folding Problem, 50 Years On](https://papers-gamma.link/paper/78)

- [Computational inference of gene regulatory networks: Approaches, limitations and opportunities](https://papers-gamma.link/paper/77)

- [Graph complexity analysis identifies an ETV5 tumor-specific network in human and murine low-grade glioma](https://papers-gamma.link/paper/79)

- [Gene Networks in Plant Biology: Approaches in Reconstruction and Analysis](https://papers-gamma.link/paper/76)

- [The non-convex Burer–Monteiro approach works on smooth semidefinite programs](https://papers-gamma.link/paper/80)

- [Solving SDPs for synchronization and MaxCut problems via the Grothendieck inequality](https://papers-gamma.link/paper/81)

- [Influence maximization in complex networks through optimal percolation](https://papers-gamma.link/paper/70)

- [Motifs in Temporal Networks](https://papers-gamma.link/paper/61)

- [Deep Sparse Rectifier Neural Networks](https://papers-gamma.link/paper/69)

- [Sparse Convolutional Neural Networks](https://papers-gamma.link/paper/67)

- [A fast and simple algorithm for training neural probabilistic language models](https://papers-gamma.link/paper/58)

- [Adding One Neuron Can Eliminate All Bad Local Minima](https://papers-gamma.link/paper/71)

## I read the following papers.

### 2019-2020:

- [Fast and High Quality Multilevel Scheme for Partitioning Irregular Graphs](https://papers-gamma.link/paper/162)

- [Karp-Sipser based kernels for bipartite graph matching](https://papers-gamma.link/paper/160)

- [Speedup Graph Processing by Graph Ordering](https://papers-gamma.link/paper/159)

- [Tree Sampling Divergence: An Information-Theoretic Metric for Hierarchical Graph Clustering](https://papers-gamma.link/paper/154)

### 2018-2019:

- [SWeG: Lossless and Lossy Summarization of Web-Scale Graphs](https://papers-gamma.link/paper/139)

- [Smoothed Analysis: An Attempt to Explain the Behavior of Algorithms in Practice](https://papers-gamma.link/paper/129)

- [Are stable instances easy?](https://papers-gamma.link/paper/128)

- [Hierarchical Taxonomy Aware Network Embedding](https://papers-gamma.link/paper/116)

- [Billion-scale Network Embedding with Iterative Random Projection](https://papers-gamma.link/paper/110)

- [HARP: Hierarchical Representation Learning for Networks](https://papers-gamma.link/paper/109/)

- [Layered Label Propagation: A MultiResolution Coordinate-Free Ordering for Compressing Social Networks](https://papers-gamma.link/paper/105)

### 2017-2018:

- [Link Prediction in Graph Streams](https://papers-gamma.link/paper/101)

- [The Community-search Problem and How to Plan a Successful Cocktail Party](https://papers-gamma.link/paper/74)

- [A Nonlinear Programming Algorithm for Solving Semidefinite Programs via Low-rank Factorization](https://papers-gamma.link/paper/55)

- [Deep Learning](https://papers-gamma.link/paper/68)

- [Reducing the Dimensionality of Data with Neural Networks](https://papers-gamma.link/paper/65)

- [Representation Learning on Graphs: Methods and Applications](https://papers-gamma.link/paper/60)

- [Improved Approximation Algorithms for MAX k-CUT and MAX BISECTION](https://papers-gamma.link/paper/56)

- [Cauchy Graph Embedding](https://papers-gamma.link/paper/53)

- [Phase Transitions in Semidefinite Relaxations](https://papers-gamma.link/paper/57)

- [Graph Embedding Techniques, Applications, and Performance: A Survey](https://papers-gamma.link/paper/52)

- [VERSE: Versatile Graph Embeddings from Similarity Measures](https://papers-gamma.link/paper/48)

- [Hierarchical Clustering Beyond the Worst-Case](https://papers-gamma.link/paper/45)

- [Scalable Motif-aware Graph Clustering](https://papers-gamma.link/paper/18)

- [Practical Algorithms for Linear Boolean-width](https://papers-gamma.link/paper/40)

- [New Perspectives and Methods in Link Prediction](https://papers-gamma.link/paper/28/New%20Perspectives%20and%20Methods%20in%20Link%20Prediction)

- [In-Core Computation of Geometric Centralities with HyperBall: A Hundred Billion Nodes and Beyond](https://papers-gamma.link/paper/37)

- [Diversity is All You Need: Learning Skills without a Reward Function](https://papers-gamma.link/paper/36)

- [When Hashes Met Wedges: A Distributed Algorithm for Finding High Similarity Vectors](https://papers-gamma.link/paper/23)

- [Fast Approximation of Centrality](https://papers-gamma.link/paper/35/Fast%20Approximation%20of%20Centrality)

- [Indexing Public-Private Graphs](https://papers-gamma.link/paper/19/Indexing%20Public-Private%20Graphs)

- [On the uniform generation of random graphs with prescribed degree sequences](https://papers-gamma.link/paper/26/On%20the%20uniform%20generation%20of%20random%20graphs%20with%20prescribed%20d%20egree%20sequences)

- [Linear Additive Markov Processes](https://papers-gamma.link/paper/21/Linear%20Additive%20Markov%20Processes)

- [ESCAPE: Efficiently Counting All 5-Vertex Subgraphs](https://papers-gamma.link/paper/17/ESCAPE:%20Efficiently%20Counting%20All%205-Vertex%20Subgraphs)

- [The k-peak Decomposition: Mapping the Global Structure of Graphs](https://papers-gamma.link/paper/16/The%20k-peak%20Decomposition:%20Mapping%20the%20Global%20Structure%20of%20Graphs)

- [A Fast and Provable Method for Estimating Clique Counts Using Turán’s Theorem](https://papers-gamma.link/paper/24)

Comments:

Great paper! A pleasure to read!

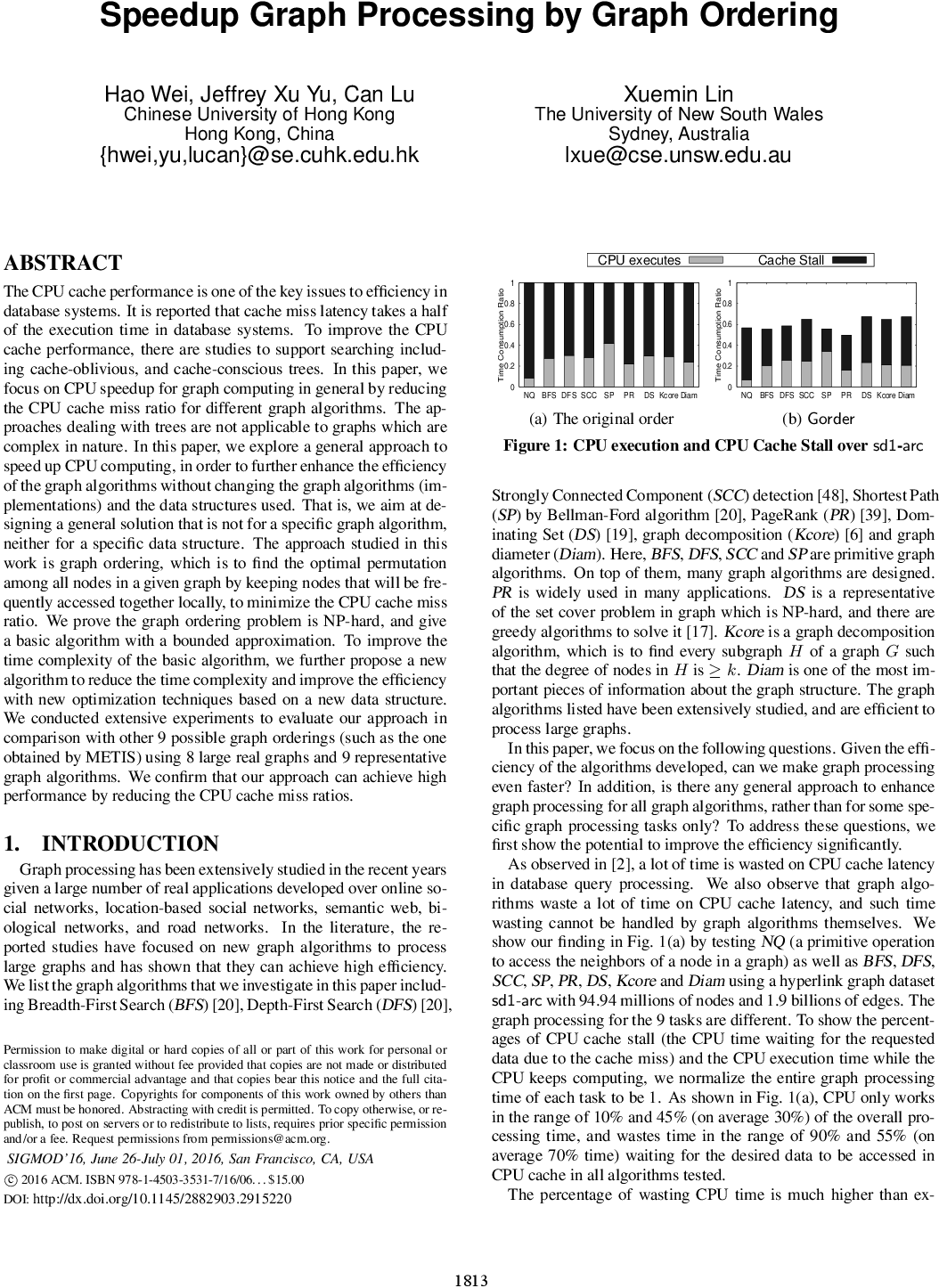

- Using the proposed ordering divides the running time by no more than two compared to using other orderings. This is a bit disappointing.

- Why is there no comparison against a random permutation of the nodes? I'm curious how badly a random ordering performs.

- "the optimal score $F_w$ cannot be computed due to the NP-hard complexity": taken as is, this statement is not true, since only a few real-world datasets are considered. In practice, these real-world datasets could lead to easy instances of the problem.

- Is there any publicly available implementation of the proposed algorithm?

I find the following paragraph confusing:

"In general, the problem of finding the optimal graph ordering by maximizing $F(\phi)$ is the problem of solving maxTSP-$w$ with a window size $w$ as a variant of the maxTSP problem. To solve the graph ordering problem as maxTSP-$w$, we can construct an edge-weighted complete undirected graph $G_w$ from $G$. Here, $V(G_w) =V(G)$, and there is an edge $(v_i, v_j)\in E(G_w)$ for any pair of nodes in $V(G_w)$. The edge-weight for an edge $(v_i, v_j)$ in $G_w$, denoted as $s_{ij}$, is $S(v_i, v_j)$ computed for the two nodes in the original graph $G$. Then the optimal maxTSP-$w$ over $G$ is the solution of maxTSP over $G$. Note that maxTSP only cares the weight between two adjacent nodes, whereas maxTSP-$w$ cares the sum of weights within a window of size $w$."

I think that:

- The index $w$ of $G_w$ does not refer to the window size $w$, but stands for "weight".

- It should be "the optimal $F(\phi)$ over $G$ is the solution of maxTSP-$w$ over $G_w$" instead of "the optimal maxTSP-$w$ over $G$ is the solution of maxTSP over $G$".

- Along the same lines, in the paragraph just before that one: "The optimal graph ordering, for the window size $w= 1$, by maximizing $F(\phi)$ is equivalent to the maximum traveling salesman problem, denoted as maxTSP for short." It should be made clear that maxTSP has to be solved on $G_w$, where $w$ stands for weight: the complete graph with weight $S(u,v)$ on each edge $(u,v)$.
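To make the reading above concrete, here is a minimal sketch of the objective as I understand it. It is not the authors' implementation. The score matrix `S` is a made-up toy example, the function names `window_score`, `path_weight` and `best_order` are hypothetical, and I assume that an ordering corresponds to an open Hamiltonian path in the complete weighted graph rather than a closed tour. The sketch checks that for $w = 1$ the windowed score is exactly the path weight on the complete graph, and it finds the optimum by exhaustive search, which is only feasible for a handful of nodes.

```python
import itertools
import random


def window_score(order, S, w):
    """Sum of S[u][v] over all pairs placed at most w positions apart."""
    total = 0
    for i, u in enumerate(order):
        # Nodes in the window of size w that follows position i.
        for v in order[i + 1 : i + 1 + w]:
            total += S[u][v]
    return total


def path_weight(order, S):
    """Weight of the path visiting the nodes in the given order
    in the complete graph whose edge (u, v) has weight S[u][v]."""
    return sum(S[u][v] for u, v in zip(order, order[1:]))


def best_order(S, w):
    """Exhaustive search over all permutations (tiny instances only)."""
    nodes = range(len(S))
    return max(itertools.permutations(nodes),
               key=lambda p: window_score(p, S, w))


# Hypothetical symmetric scores S(u, v) for 5 nodes (assumption, toy data).
S = [
    [0, 3, 1, 0, 0],
    [3, 0, 2, 1, 0],
    [1, 2, 0, 4, 1],
    [0, 1, 4, 0, 2],
    [0, 0, 1, 2, 0],
]

identity = [0, 1, 2, 3, 4]
shuffled = random.Random(0).sample(range(5), 5)  # random-permutation baseline

for w in (1, 2):
    opt = best_order(S, w)
    print("w =", w,
          "identity:", window_score(identity, S, w),
          "random:", window_score(shuffled, S, w),
          "optimal:", window_score(opt, S, w))

# With w = 1 the windowed score coincides with the path weight.
print(window_score(identity, S, 1) == path_weight(identity, S))
```

The random baseline is included only because it is cheap to add; on real graphs the exhaustive search would have to be replaced by a heuristic or an exact solver.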

MINORS:

- "The night graph algorithms" and "the night orderings": "night" is written where "nine" is meant.

Interesting comments.

> - Using the proposed ordering divides the running time by no more than two compared to using other orderings. This is a bit disappointing.

Yes, but from Figure 1 we can infer that we cannot expect much more than this... Maybe a factor of 3 or 4 at most.

> - "the optimal score $F_w$ cannot be computed due to the NP-hard complexity": such as it is, this statement is not true since only a few real-world datasets are considered. In practice, these real-world datasets could lead to easy instances of the problem.

Very relevant. Still, it looks very much like searching for the best solution of a maxTSP-$w$ on a real instance. As I understand it, they actually found the optimal $F_w$ in some cases (Table 1).

Every time I see how people optimize CPU or disk cache usage, I admire it.

It makes me think that optimizing for [speculative execution](https://en.wikipedia.org/wiki/Speculative_execution) and branch prediction could also be used to this end.

Comments: