Wellcome to Maximimi's library,

You can find here all papers liked or uploaded by Maximimitogether with brief user bio and description of her/his academic activity.

[Link to my homepage](https://sites.google.com/view/danisch/home)

## I will read the following papers.

- [Quasi-Succinct Indices](https://papers-gamma.link/paper/130)

- [PageRank as a Function of the Damping Factor](https://papers-gamma.link/paper/106)

- [Graph Stream Algorithms: A Survey](https://papers-gamma.link/paper/102)

- [Network Sampling: From Static to Streaming Graphs](https://papers-gamma.link/paper/122)

- [The Protein-Folding Problem, 50 Years On](https://papers-gamma.link/paper/78)

- [Computational inference of gene regulatory networks: Approaches, limitations and opportunitie](https://papers-gamma.link/paper/77)

- [Graph complexity analysis identifies an ETV5 tumor-specific network in human and murine low-grade glioma](https://papers-gamma.link/paper/79)

- [Gene Networks in Plant Biology: Approaches in Reconstruction and Analysis](https://papers-gamma.link/paper/76)

- [The non-convex Burer–Monteiro approach works on smooth semidefinite programs](https://papers-gamma.link/paper/80)

- [Solving SDPs for synchronization and MaxCut problems via the Grothendieck inequality](https://papers-gamma.link/paper/81)

- [Influence maximization in complex networks through optimal percolation](https://papers-gamma.link/paper/70)

- [Motifs in Temporal Networks](https://papers-gamma.link/paper/61)

- [Deep Sparse Rectifier Neural Networks](https://papers-gamma.link/paper/69)

- [Sparse Convolutional Neural Networks](https://papers-gamma.link/paper/67)

- [A fast and simple algorithm for training neural probabilistic language models](https://papers-gamma.link/paper/58)

- [Adding One Neuron Can Eliminate All Bad Local Minima](https://papers-gamma.link/paper/71)

## I read the following papers.

### 2019-2020

- [Fast and High Quality Multilevel Scheme for Partitioning Irregular Graphs](https://papers-gamma.link/paper/162)

- [Karp-Sipser based kernels for bipartite graph matching](https://papers-gamma.link/paper/160)

- [Speedup Graph Processing by Graph Ordering](https://papers-gamma.link/paper/159)

- [Tree Sampling Divergence: An Information-Theoretic Metric for Hierarchical Graph Clustering](https://papers-gamma.link/paper/154)

### 2018-2019:

- [SWeG: Lossless and Lossy Summarization of Web-Scale Graphs](https://papers-gamma.link/paper/139)

- [Smoothed Analysis: An Attempt to Explain the Behavior of Algorithms in Practice](https://papers-gamma.link/paper/129)

- [Are stable instances easy?](https://papers-gamma.link/paper/128)

- [Hierarchical Taxonomy Aware Network Embedding](https://papers-gamma.link/paper/116)

- [Billion-scale Network Embedding with Iterative Random Projection](https://papers-gamma.link/paper/110)

- [HARP: Hierarchical Representation Learning for Networks](https://papers-gamma.link/paper/109/)

- [Layered Label Propagation: A MultiResolution Coordinate-Free Ordering for Compressing Social Networks](https://papers-gamma.link/paper/105)

### 2017-2018:

- [Link Prediction in Graph Streams](https://papers-gamma.link/paper/101)

- [The Community-search Problem and How to Plan a Successful Cocktail Party](https://papers-gamma.link/paper/74)

- [A Nonlinear Programming Algorithm for Solving Semidefinite Programs via Low-rank Factorization](https://papers-gamma.link/paper/55)

- [Deep Learning](https://papers-gamma.link/paper/68)

- [Reducing the Dimensionality of Data with Neural Networks](https://papers-gamma.link/paper/65)

- [Representation Learning on Graphs: Methods and Applications](https://papers-gamma.link/paper/60)

- [Improved Approximation Algorithms for MAX k-CUT and MAX BISECTION](https://papers-gamma.link/paper/56)

- [Cauchy Graph Embedding](https://papers-gamma.link/paper/53)

- [Phase Transitions in Semidefinite Relaxations](https://papers-gamma.link/paper/57)

- [Graph Embedding Techniques, Applications, and Performance: A Survey](https://papers-gamma.link/paper/52)

- [VERSE: Versatile Graph Embeddings from Similarity Measures](https://papers-gamma.link/paper/48)

- [Hierarchical Clustering Beyond the Worst-Case](https://papers-gamma.link/paper/45)

- [Scalable Motif-aware Graph Clustering](https://papers-gamma.link/paper/18)

- [Practical Algorithms for Linear Boolean-width](https://papers-gamma.link/paper/40)

- [New Perspectives and Methods in Link Prediction](https://papers-gamma.link/paper/28/New%20Perspectives%20and%20Methods%20in%20Link%20Prediction)

- [In-Core Computation of Geometric Centralities with HyperBall: A Hundred Billion Nodes and Beyond](https://papers-gamma.link/paper/37)

- [Diversity is All You Need: Learning Skills without a Reward Function](https://papers-gamma.link/paper/36)

- [When Hashes Met Wedges: A Distributed Algorithm for Finding High Similarity Vectors](https://papers-gamma.link/paper/23)

- [Fast Approximation of Centrality](https://papers-gamma.link/paper/35/Fast%20Approximation%20of%20Centrality)

- [Indexing Public-Private Graphs](https://papers-gamma.link/paper/19/Indexing%20Public-Private%20Graphs)

- [On the uniform generation of random graphs with prescribed degree sequences](https://papers-gamma.link/paper/26/On%20the%20uniform%20generation%20of%20random%20graphs%20with%20prescribed%20d%20egree%20sequences)

- [Linear Additive Markov Processes](https://papers-gamma.link/paper/21/Linear%20Additive%20Markov%20Processes)

- [ESCAPE: Efficiently Counting All 5-Vertex Subgraphs](https://papers-gamma.link/paper/17/ESCAPE:%20Efficiently%20Counting%20All%205-Vertex%20Subgraphs)

- [The k-peak Decomposition: Mapping the Global Structure of Graphs](https://papers-gamma.link/paper/16/The%20k-peak%20Decomposition:%20Mapping%20the%20Global%20Structure%20of%20Graphs)

- [A Fast and Provable Method for Estimating Clique Counts Using Turán’s Theorem](https://papers-gamma.link/paper/24)

☆

1

Comments:

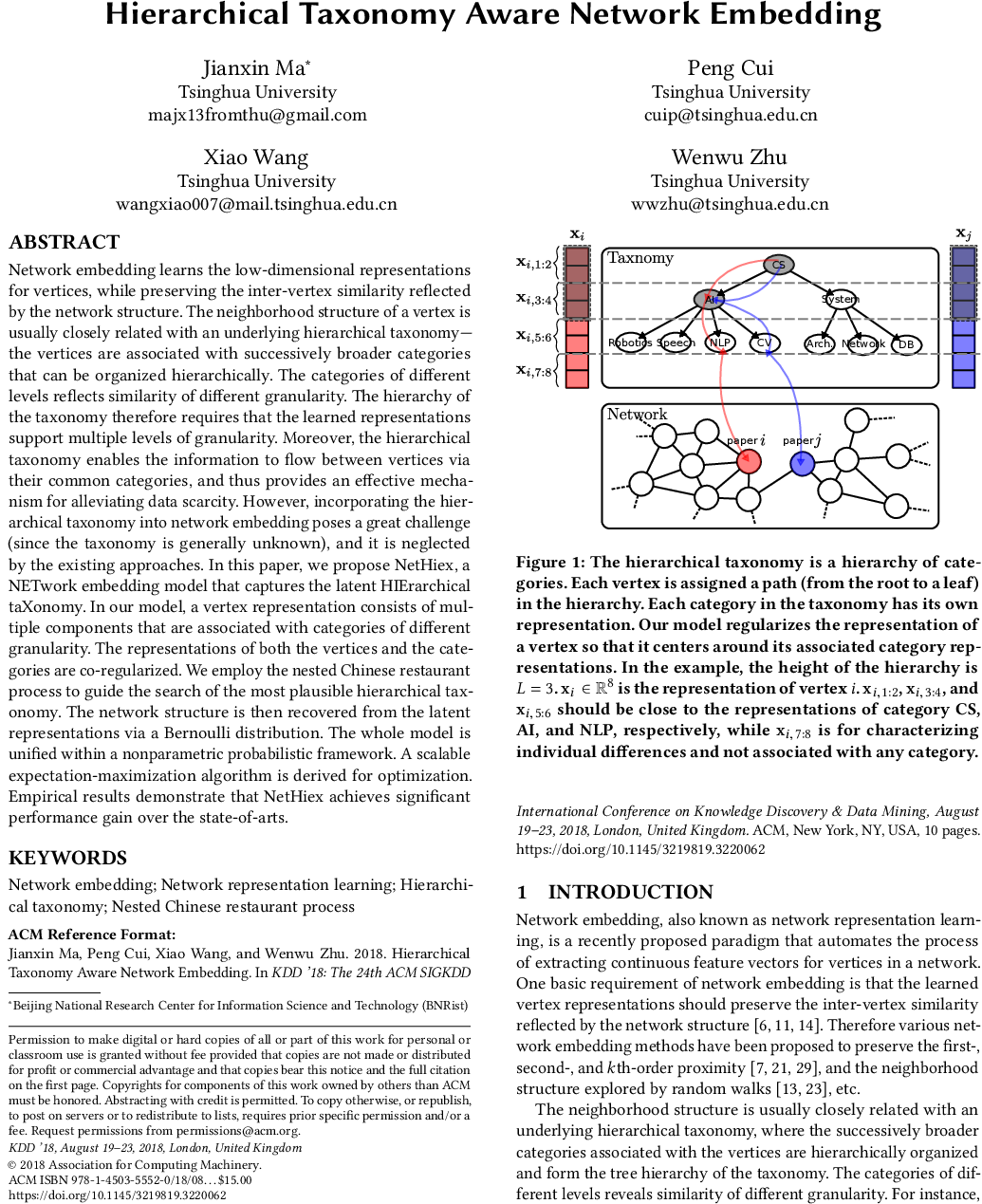

The idea of using a hierarchical clustering of nodes in order to guide network embedding is very nice!

### Related work:

Hierarchical clustering for network embedding has also been used in the two following papers:

- [HARP: Hierarchical Representation Learning for Networks](https://papers-gamma.link/paper/109)

- [MILE: A Multi-Level Framework for Scalable Graph Embedding](https://papers-gamma.link/paper/104)

### Scalability:

The largest graph used in the experiments has only 334k edges and the running time on that network is not reported. In figure 7, the method takes 2 minutes on a BA network of less than 10k nodes and 150k edges.

It would have been nice to report the running time on a graph of 1G edges in order to evaluate the scalability in a better way.

### Reproducibility:

The implementation does not seem to be publically available and as the proposed method is complicated, the results are hard to reproduce.

### Table 3:

It is interesting that the dot-product approach leads to the best results for link prediction in comparison with the 4 strategies used in node2vec. Usualy, Hadamard leads to the best results.

Comments to a comment:

1. Code is available at https://github.com/JianxinMa/jianxinma.github.io/blob/master/assets/nethiex-linux.zip at the moment. It will probably be put on http://nrl.thumedialab.com/ later.

Yeah the implementation is quite complicated. Still, a lot simpler than the variational inference for the nested chinese restaurant process (which I can't find any implementation).

2. Scalability shouldn't be a problem in theory (the time complexity is linear per iteration in theory). But the released code is not really optimized (not even multi-threaded or distributed).

3. It's possible for Hadamard to be worse than dot product, as it requires training a classifier and can over-fit if not tuned carefully or trained on a small sample.

☆

1

Comments:

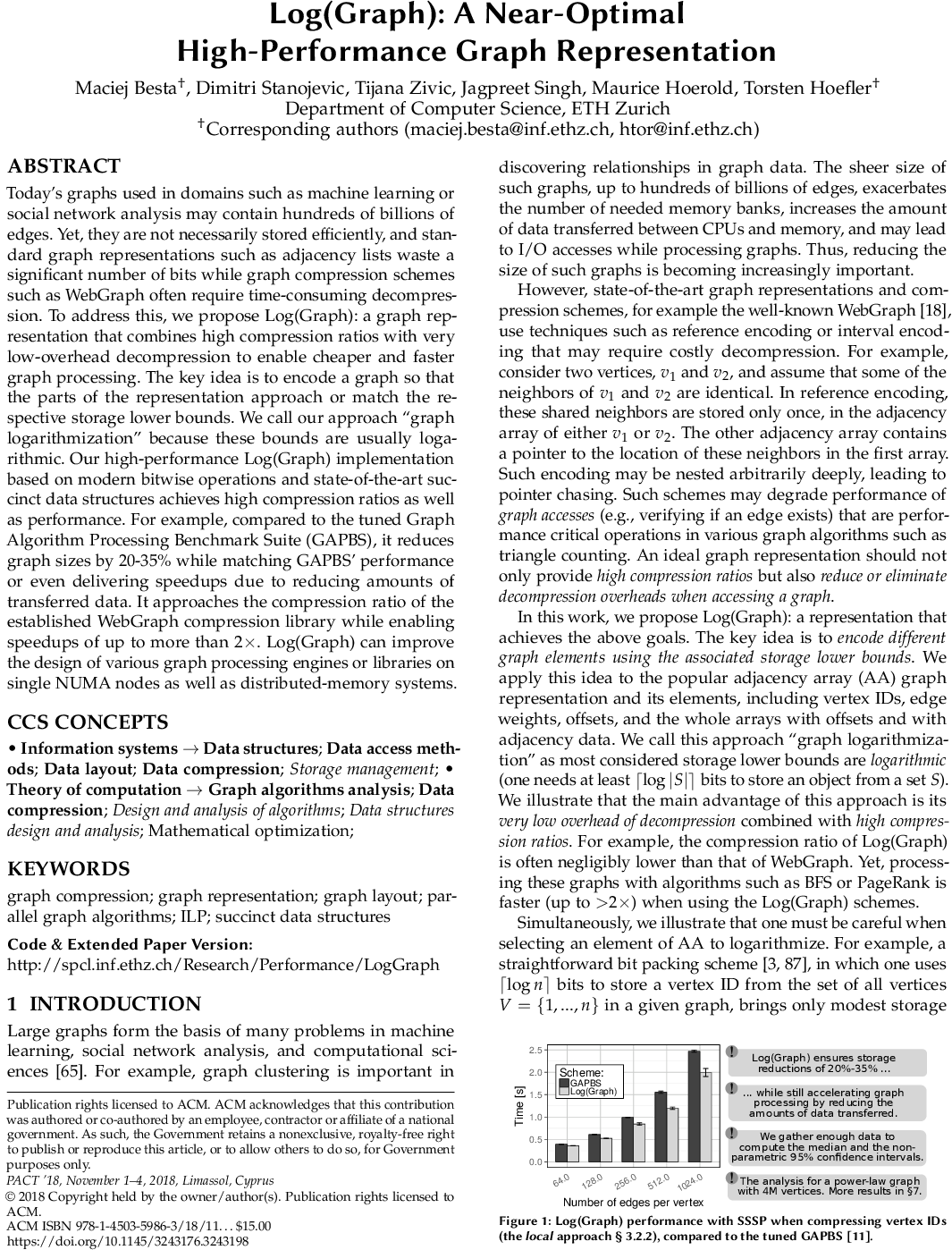

## Interesting paper!

Code is coming soon: http://spcl.inf.ethz.ch/Research/Performance/LogGraph/

The method can reduce the size needed to store the input graph by 35% compared to the adjacency array format, while not making computations slower.

Several compression frameworks are suggested in the paper. All of them use fixed length compression codes contrarily the WebGraph framework.

One of the compression frameworks leads to a space of $\sum_{v\in V} \log(\widehat{N_v})\cdot d_v+\log(\log(\widehat{N_v}))$ bits (cf. section 3.2, $\widehat{N_v}$ is the maximum ID of the neighbors of node $v$) as it uses $\log(\widehat{N_v})$ bits to store the $d_v$ neighbors of each node $v$ and $\log(\log(\widehat{N_v}))$ bits to store the length of the codes used for each node $v$. Note that an additional cost is needed in order to have a direct access to the neighbors of each node (this is done using succinct bit vectors (it seems to lead to a total number of bits close to $n\cdot \log(m)$)). The nodes can be renumbered such that the number of bits is minimized.

### Approaches the compression ratio of WebGraph

I read that the suggested compression method "approaches the compression ratio of the established WebGraph compression library". What does it mean exactly? The Webgraph compression library can compress web graphs using less than 2 bits per link. The suggested compression framework seems to be far from that compression ratio.

So I think that what is meant is that on graphs other than web graphs the suggested method approaches the compression ratio of the Webgraph library. Am I correct?

### Java VS C++

I read "We use the WebGraph original tuned Java implementation for gathering the data on compression ratios but, as Log(Graph) is developed in C++, we use a proof-of-a-concept C++ implementation of WG schemes for a fair C++ based performance analysis.".

I do not understand. This is because the original Java implementation is slower than a C++ implementation? Which C++ implementation was used for WG?

### Cost function in section 3.6

The quantity to minimize should be $\sum_{v\in V} \log(\widehat{N_v})\cdot d_v$ (cf. section 3.2, $\widehat{N_v}$ is the maximum ID of the neighbors of node $v$), that is intuitively minimizing $\widehat{N_v}$ for nodes with high $d_v$.

It is not clear to me why this other counter-intuitive objective is used: $\sum_{v\in V} \widehat{N_v}\cdot \frac{1}{d_v}$.

### Webgraph decompression impacts running time

I agree that referencing nodes can impact the running time (especially if there are chains of references). However, it is possible to use the WebGraph framework without using referencing, that is only using gap encoding with variable length instantaneous codes.

I think that doing that does not impact significantly the running time, does it?

### Typos and minors

- Section 4.2.: "There are exactly $Q$ bit vectors of length with n ones and the storage lower bound is $Q$ bits." -> the storage lower bound is $\log(Q)$ bits.

Some people discuss the paper (and troll the paper authors) on hackernews:

https://news.ycombinator.com/item?id=18081978

Comments: