Welcome to Maximimi's library.

You can find here all papers liked or uploaded by Maximimi, together with a brief user bio and a description of her/his academic activity.

[Link to my homepage](https://sites.google.com/view/danisch/home)

## I will read the following papers.

- [Quasi-Succinct Indices](https://papers-gamma.link/paper/130)

- [PageRank as a Function of the Damping Factor](https://papers-gamma.link/paper/106)

- [Graph Stream Algorithms: A Survey](https://papers-gamma.link/paper/102)

- [Network Sampling: From Static to Streaming Graphs](https://papers-gamma.link/paper/122)

- [The Protein-Folding Problem, 50 Years On](https://papers-gamma.link/paper/78)

- [Computational inference of gene regulatory networks: Approaches, limitations and opportunities](https://papers-gamma.link/paper/77)

- [Graph complexity analysis identifies an ETV5 tumor-specific network in human and murine low-grade glioma](https://papers-gamma.link/paper/79)

- [Gene Networks in Plant Biology: Approaches in Reconstruction and Analysis](https://papers-gamma.link/paper/76)

- [The non-convex Burer–Monteiro approach works on smooth semidefinite programs](https://papers-gamma.link/paper/80)

- [Solving SDPs for synchronization and MaxCut problems via the Grothendieck inequality](https://papers-gamma.link/paper/81)

- [Influence maximization in complex networks through optimal percolation](https://papers-gamma.link/paper/70)

- [Motifs in Temporal Networks](https://papers-gamma.link/paper/61)

- [Deep Sparse Rectifier Neural Networks](https://papers-gamma.link/paper/69)

- [Sparse Convolutional Neural Networks](https://papers-gamma.link/paper/67)

- [A fast and simple algorithm for training neural probabilistic language models](https://papers-gamma.link/paper/58)

- [Adding One Neuron Can Eliminate All Bad Local Minima](https://papers-gamma.link/paper/71)

## I read the following papers.

### 2019-2020:

- [Fast and High Quality Multilevel Scheme for Partitioning Irregular Graphs](https://papers-gamma.link/paper/162)

- [Karp-Sipser based kernels for bipartite graph matching](https://papers-gamma.link/paper/160)

- [Speedup Graph Processing by Graph Ordering](https://papers-gamma.link/paper/159)

- [Tree Sampling Divergence: An Information-Theoretic Metric for Hierarchical Graph Clustering](https://papers-gamma.link/paper/154)

### 2018-2019:

- [SWeG: Lossless and Lossy Summarization of Web-Scale Graphs](https://papers-gamma.link/paper/139)

- [Smoothed Analysis: An Attempt to Explain the Behavior of Algorithms in Practice](https://papers-gamma.link/paper/129)

- [Are stable instances easy?](https://papers-gamma.link/paper/128)

- [Hierarchical Taxonomy Aware Network Embedding](https://papers-gamma.link/paper/116)

- [Billion-scale Network Embedding with Iterative Random Projection](https://papers-gamma.link/paper/110)

- [HARP: Hierarchical Representation Learning for Networks](https://papers-gamma.link/paper/109/)

- [Layered Label Propagation: A MultiResolution Coordinate-Free Ordering for Compressing Social Networks](https://papers-gamma.link/paper/105)

### 2017-2018:

- [Link Prediction in Graph Streams](https://papers-gamma.link/paper/101)

- [The Community-search Problem and How to Plan a Successful Cocktail Party](https://papers-gamma.link/paper/74)

- [A Nonlinear Programming Algorithm for Solving Semidefinite Programs via Low-rank Factorization](https://papers-gamma.link/paper/55)

- [Deep Learning](https://papers-gamma.link/paper/68)

- [Reducing the Dimensionality of Data with Neural Networks](https://papers-gamma.link/paper/65)

- [Representation Learning on Graphs: Methods and Applications](https://papers-gamma.link/paper/60)

- [Improved Approximation Algorithms for MAX k-CUT and MAX BISECTION](https://papers-gamma.link/paper/56)

- [Cauchy Graph Embedding](https://papers-gamma.link/paper/53)

- [Phase Transitions in Semidefinite Relaxations](https://papers-gamma.link/paper/57)

- [Graph Embedding Techniques, Applications, and Performance: A Survey](https://papers-gamma.link/paper/52)

- [VERSE: Versatile Graph Embeddings from Similarity Measures](https://papers-gamma.link/paper/48)

- [Hierarchical Clustering Beyond the Worst-Case](https://papers-gamma.link/paper/45)

- [Scalable Motif-aware Graph Clustering](https://papers-gamma.link/paper/18)

- [Practical Algorithms for Linear Boolean-width](https://papers-gamma.link/paper/40)

- [New Perspectives and Methods in Link Prediction](https://papers-gamma.link/paper/28/New%20Perspectives%20and%20Methods%20in%20Link%20Prediction)

- [In-Core Computation of Geometric Centralities with HyperBall: A Hundred Billion Nodes and Beyond](https://papers-gamma.link/paper/37)

- [Diversity is All You Need: Learning Skills without a Reward Function](https://papers-gamma.link/paper/36)

- [When Hashes Met Wedges: A Distributed Algorithm for Finding High Similarity Vectors](https://papers-gamma.link/paper/23)

- [Fast Approximation of Centrality](https://papers-gamma.link/paper/35/Fast%20Approximation%20of%20Centrality)

- [Indexing Public-Private Graphs](https://papers-gamma.link/paper/19/Indexing%20Public-Private%20Graphs)

- [On the uniform generation of random graphs with prescribed degree sequences](https://papers-gamma.link/paper/26/On%20the%20uniform%20generation%20of%20random%20graphs%20with%20prescribed%20d%20egree%20sequences)

- [Linear Additive Markov Processes](https://papers-gamma.link/paper/21/Linear%20Additive%20Markov%20Processes)

- [ESCAPE: Efficiently Counting All 5-Vertex Subgraphs](https://papers-gamma.link/paper/17/ESCAPE:%20Efficiently%20Counting%20All%205-Vertex%20Subgraphs)

- [The k-peak Decomposition: Mapping the Global Structure of Graphs](https://papers-gamma.link/paper/16/The%20k-peak%20Decomposition:%20Mapping%20the%20Global%20Structure%20of%20Graphs)

- [A Fast and Provable Method for Estimating Clique Counts Using Turán’s Theorem](https://papers-gamma.link/paper/24)


Comments:

The method uses random projections of the column vectors of the matrix $S=(\alpha_0 I+\alpha_1 A+\alpha_2 A^2+\dots+\alpha_q A^q)$. It is shown that such a random projection is a heuristic to minimize a relevant cost function (equation (2)) with some guarantees (Theorem 1).

As only the products of a set of random vectors $R$ with the matrix $S$ are needed, explicitly forming the (potentially dense) matrix $S$ is avoided: only the vectors $A^p\cdot R$ are computed. The running time is $O(n\cdot d^2+ m\cdot q\cdot d)$, where $m$ is the number of edges, $n$ is the number of nodes, $d$ is the dimension of the embedding (and the number of random vectors in $R$) and $q$ is the order of the embedding (cf. the formula of $S$). The operations involved are very simple, leading to a very fast practical algorithm.
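To make the "never form $S$" point concrete, here is a minimal pure-Python sketch of the iterative projection. It is an illustration under assumptions, not the authors' code: the function names (`gram_schmidt`, `spmv`, `randne_sketch`), the adjacency-list input, and the Gaussian initialization are hypothetical choices, and the orthogonalization step is assumed to be where the $n\cdot d^2$ term comes from. It requires $d \le n$.

```python
import random

def gram_schmidt(cols):
    """Orthonormalize d column vectors of length n (modified Gram-Schmidt), O(n * d^2)."""
    basis = []
    for v in cols:
        w = list(v)
        for b in basis:
            dot = sum(x * y for x, y in zip(w, b))
            w = [x - dot * y for x, y in zip(w, b)]
        norm = sum(x * x for x in w) ** 0.5
        basis.append([x / norm for x in w])
    return basis

def spmv(adj, vec):
    """Multiply the adjacency matrix (given as adjacency lists) by one vector, O(m)."""
    return [sum(vec[j] for j in neighbors) for neighbors in adj]

def randne_sketch(adj, d, alphas, seed=0):
    """Return one d-dimensional row per node: sum_p alphas[p] * A^p * R.

    S itself is never built; each A^p * R is obtained from A^(p-1) * R
    with d sparse matrix-vector products, i.e. O(m * d) per order.
    """
    rng = random.Random(seed)
    n = len(adj)
    # Assumption: Gaussian random columns, then orthonormalized.
    cols = gram_schmidt([[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(d)])
    emb = [[alphas[0] * x for x in col] for col in cols]
    for alpha in alphas[1:]:
        cols = [spmv(adj, col) for col in cols]
        for e, col in zip(emb, cols):
            for i in range(n):
                e[i] += alpha * col[i]
    # Transpose from d columns of length n to n rows of length d.
    return [[emb[k][i] for k in range(d)] for i in range(n)]
```

For example, `randne_sketch([[1], [0, 2], [1, 3], [2]], d=2, alphas=[1.0, 0.5, 0.25])` embeds a 4-node path with $q=2$.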

It is shown in the experiments that these simple ideas lead to the most scalable embedding method and that the method is still accurate for some machine learning tasks such as classification, link prediction, and network reconstruction.

The executable in MATLAB is publicly available here: https://github.com/ZW-ZHANG/RandNE

An alternative open source implementation in C is available here: https://github.com/maxdan94/RandNE

### Concerns regarding Theorem 3

"Theorem 3. The time complexity of Algorithm 3 is linear with respect to the number of changed nodes and the number of changed edges respectively."

In the proof, the running time is shown to be in $O(M'\cdot R(q))$, where $M'$ is the number of new or deleted edges and $R(q)$ is the size of the $q$-step neighborhood of an edge. We can have $R(q)=\Theta(N)$ for some graphs, especially real-world graphs where the average distance between two nodes is very small (say less than 4): https://research.fb.com/three-and-a-half-degrees-of-separation/. The theorem is thus not correct without stating further assumptions.
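The concern can be checked on toy graphs with a small breadth-first search. The sketch below is only an illustration: `q_step_neighborhood` is a hypothetical helper, and the star and path graphs are made-up examples, not data from the paper.

```python
from collections import deque

def q_step_neighborhood(adj, sources, q):
    """Return the set of nodes within q hops of any node in `sources` (BFS)."""
    dist = {s: 0 for s in sources}
    queue = deque(sources)
    while queue:
        u = queue.popleft()
        if dist[u] == q:
            continue  # do not expand beyond q hops
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return set(dist)

n = 1000
# Star graph: node 0 is connected to every other node.
star = [list(range(1, n))] + [[0] for _ in range(n - 1)]
# Path graph: 0 - 1 - 2 - ... - (n - 1).
path = [[j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)]

star_size = len(q_step_neighborhood(star, [0, 1], q=1))
path_size = len(q_step_neighborhood(path, [0, 1], q=3))
```

On the star, the 1-step neighborhood of the edge (0, 1) already contains all $N$ nodes, while on the path the 3-step neighborhood of the same edge contains only 5 nodes. A bound in $O(M'\cdot R(q))$ is therefore linear in $M'$ only if $R(q)$ is assumed to be bounded.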

In addition, in the experiments, the running time of the suggested algorithm for dynamic graphs (Algorithm 3) is not compared to the static algorithm (Algorithm 1) re-run from scratch after each update. It is not clear to me if the gain in time is significant.

### I do not understand Section V-C1

"Network Reconstruction: The experimental setting is similar to moderate-scale networks (Section V-B2), i.e. we rank pairs of nodes according to their inner product similarities and reconstruct the network. We report the AUC scores in Table V."

There are approximately $10^{16}$ such pairs of nodes. This number of pairs is too large: I do not understand how the pairs can be ranked and the AUC computed.

### Parameter tuning

The method needs many parameters: $d$, $q$, $a_0$, $a_1$, ..., $a_q$. While $d$ and $q$ can be fixed arbitrarily, say to $d=128$ and $q=3$ (as done in the paper), it is not clear what default values would fit for the $a_k$'s.

In the paper, the $a_k$'s are found using grid search. Note that the range of values and the steps are not specified; in addition, the optimal values are not given. This hurts reproducibility.

What if grid search is not possible (e.g. no training set)? Which default values would make sense?
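As a quick check of the order of magnitude, take the 250M nodes reported for WeChat in the paper. The second function is only a guess at how an AUC could be obtained without ranking every pair, namely by sampling; the paper does not state that this is what was done, and `sampled_auc` is a hypothetical helper that works on precomputed similarity scores.

```python
import random

def num_pairs(n):
    """Number of unordered pairs of distinct nodes."""
    return n * (n - 1) // 2

def sampled_auc(pos_scores, neg_scores, samples, seed=0):
    """Monte Carlo AUC estimate: the probability that a random positive pair
    (an edge) scores higher than a random negative pair (a non-edge),
    counting ties as one half."""
    rng = random.Random(seed)
    wins = 0.0
    for _ in range(samples):
        p = rng.choice(pos_scores)
        n = rng.choice(neg_scores)
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / samples

wechat_pairs = num_pairs(250_000_000)  # about 3.1e16
```

With sampling, only the scores of the sampled pairs are needed, so the cost depends on the number of samples rather than on the roughly $3\times 10^{16}$ pairs.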
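For reference, a grid search over the weights could look like the sketch below. Everything specific here is an assumption: the paper does not give the grid, so the logarithmic grid is made up, and `toy_score` is a stand-in for a real validation metric.

```python
from itertools import product

def grid_search(evaluate, q, grid):
    """Try every combination of q + 1 weights from `grid`; keep the best score.

    The number of evaluations is len(grid) ** (q + 1).
    """
    best_weights, best_score = None, float("-inf")
    for weights in product(grid, repeat=q + 1):
        score = evaluate(weights)
        if score > best_score:
            best_weights, best_score = weights, score
    return best_weights, best_score

# Hypothetical grid: powers of ten from 0.01 to 1000 (6 values).
grid = [10 ** k for k in range(-2, 4)]

def toy_score(weights):
    """Stand-in objective that peaks at (1, 10, 100, 1000); not a real metric."""
    target = (1, 10, 100, 1000)
    return -sum((w - t) ** 2 for w, t in zip(weights, target))

best_weights, best_score = grid_search(toy_score, q=3, grid=grid)
```

With 6 grid values and $q=3$ this already means $6^4 = 1296$ embeddings to compute and evaluate, which is why the range and step of the grid matter for reproducibility.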

### Grid search and unfair comparison

In the experiments, the suggested embedding is shown to lead to better results for some tasks (such as network reconstruction, classification and link prediction) compared to other embedding methods. However, the suggested method has parameters ($a_0$, $a_1$, $a_2$ and $a_3$ since $q$ is set to $3$). These parameters are tuned using grid search, while some of the parameters of the other methods seem to be kept to default. This may not be a very fair comparison.

### Real billion-scale networks?

I read "We also report the exact running time in Table VII. It shows that RandNE can learn all the node embeddings of WeChat within 7 hours with 16 normal servers, which is promising for real billion-scale networks."

I do not understand what is meant. WeChat has 250M nodes and 4.8G edges, so its number of edges is already in the order of billions. Does "real billion-scale networks" mean networks with more than 1G nodes rather than 1G edges?

Another graph with 1G edges is available here: http://snap.stanford.edu/data/com-Friendster.html

In addition, contrary to WeChat, nodes have some labels that could be used to test the relevance of the embedding.

Graphs with billion nodes are available here: http://law.di.unimi.it/datasets.php

Unfortunately, they are directed...

Another one is here: https://sparse.tamu.edu/Sybrandt/MOLIERE_2016 (undirected, but weighted).

A Twitter graph with 25G edges can be obtained upon request: https://hal.inria.fr/hal-00948889/en/

### Typos and minor issues

- "as an theoretical guarantee"

- "an one-vs-all logistic regression"

- This relevant WWW2018 paper is not cited and the associated method is not used for comparisons: https://papers-gamma.link/paper/48


Comments:

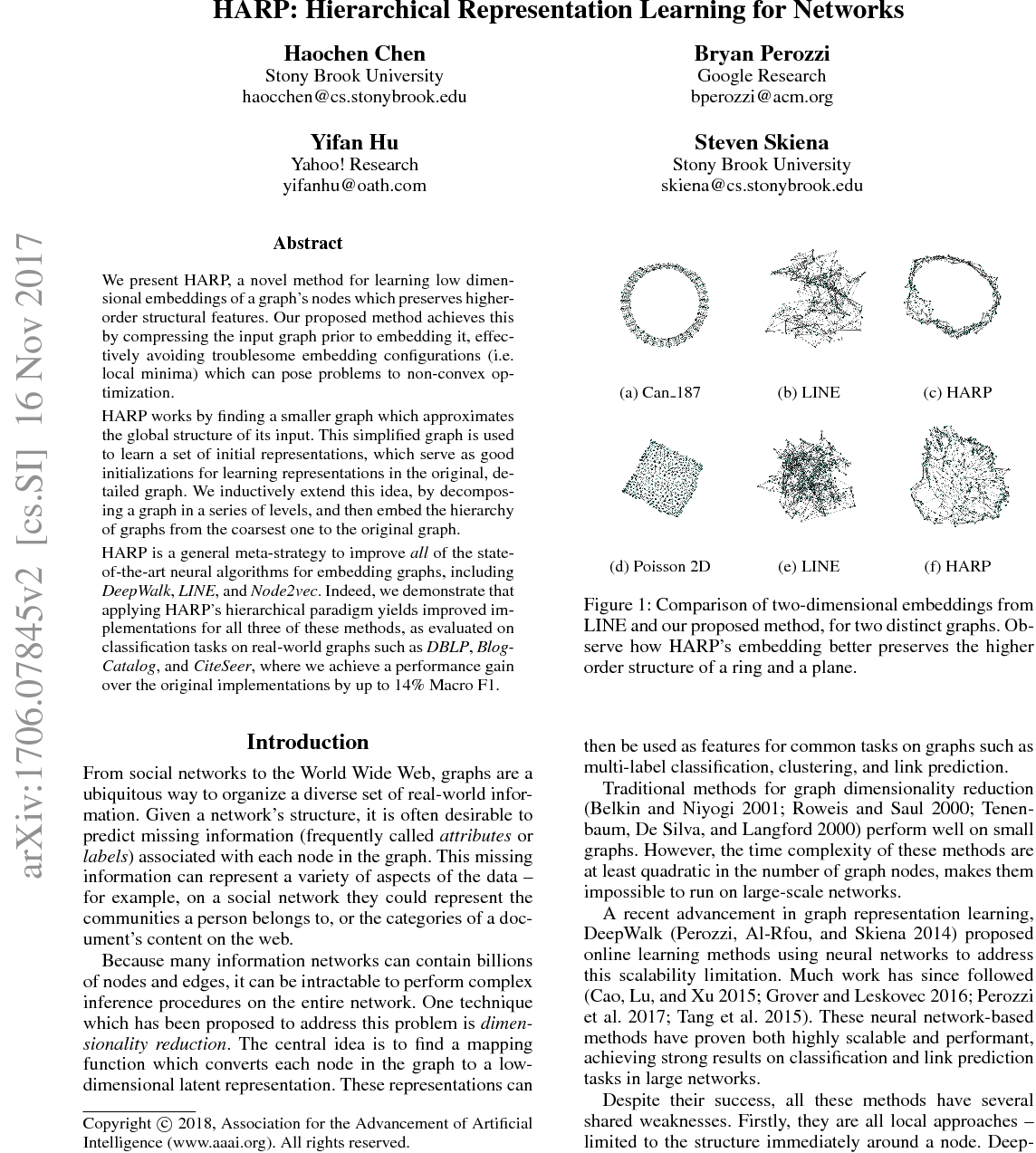

The idea of coarse-graining the graph by merging "similar" nodes (using edge collapsing or star collapsing) before applying a graph embedding method is interesting. Bringing that idea to the next level by building a hierarchy of coarse-grained graphs is also relevant. The hierarchy of coarse-grained graphs allows finding a better initialization for embedding methods relying on deep learning.

The two ways of collapsing (edge collapsing or star collapsing) are very simple and more sophisticated ways are not suggested: for instance based on modular decomposition or community detection. Maybe such more advanced ways lead to even better results.
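To show how simple edge collapsing is, here is a minimal sketch under my reading of it: greedily pick edges that share no endpoint and merge each picked pair into one super-node. The function name `edge_collapse` and the first-come greedy order are assumptions; the paper may select the edges differently.

```python
def edge_collapse(n, edges):
    """Coarsen a graph by merging the endpoints of a greedy maximal matching.

    Returns (number of super-nodes, coarse edge list, super-node id of each node).
    """
    super_of = [-1] * n
    next_id = 0
    for u, v in edges:
        # Merge u and v only if neither has been merged yet.
        if u != v and super_of[u] == -1 and super_of[v] == -1:
            super_of[u] = super_of[v] = next_id
            next_id += 1
    # Unmatched nodes become their own super-node.
    for u in range(n):
        if super_of[u] == -1:
            super_of[u] = next_id
            next_id += 1
    # Keep one coarse edge per pair of distinct super-nodes.
    coarse = {
        (min(super_of[u], super_of[v]), max(super_of[u], super_of[v]))
        for u, v in edges
        if super_of[u] != super_of[v]
    }
    return next_id, sorted(coarse), super_of

# Path 0 - 1 - 2 - 3 collapses to a single edge between two super-nodes.
coarse_n, coarse_edges, mapping = edge_collapse(4, [(0, 1), (1, 2), (2, 3)])
```

Applying the function repeatedly to its own output gives a hierarchy of smaller and smaller graphs. Replacing the matching step by a community detection step would give one of the more sophisticated variants mentioned above.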

The experimental section shows that coarse-graining the input graph before applying a graph embedding method leads to a "better" embedding. Indeed, using such an embedding improves the performance of the classification task compared to using the embedding obtained without coarse-graining. However, neither a theoretical justification nor an intuition is provided for this fact.

How about other machine learning tasks? For instance, does the suggested method also help for clustering and link prediction?

The method does not make the embedding faster even though this might have been expected since the graphs are smaller.

Figure 4: I do not understand in what way the various levels are relevant. To me, no level looks like the desired embedding.

The graphs used in the experiments are extremely small (fewer than 30k nodes). It is not clear how the method would perform on large and sparse graphs, say with 1G edges and 100M nodes (for instance these: http://snap.stanford.edu/data/#communities).

Comments: