☆

1

Comments:

### General:

A very simple and very scalable algorithm with theoretical guarantees to compute an approximation of the closeness centrality for each node in the graph.

Maybe the framework can be adapted to solve other problems. In particular, "Hoeffding's theorem" is very handy.

### Parallelism:

Note that the algorithm is embarrassingly parallel (the loop over the $k$ reference nodes can be done in parallel with almost no additional work).

### Experiments:

It would be nice to have some experiments on large real-world graphs.

An efficient (and parallel) implementation of the algorithm is available here: https://github.com/maxdan94/BFS

### Related work:

More recent related work by Paolo Boldi and Sebastiano Vigna:

- http://mmds-data.org/presentations/2016/s-vigna.pdf

- https://arxiv.org/abs/1011.5599

- https://papers-gamma.link/paper/37

It also gives an approximation of the centrality for each node in the graph and it also scales to huge graphs. Which algorithm is "the best"?

☆

2

Comments:

Just a few remarks from me,

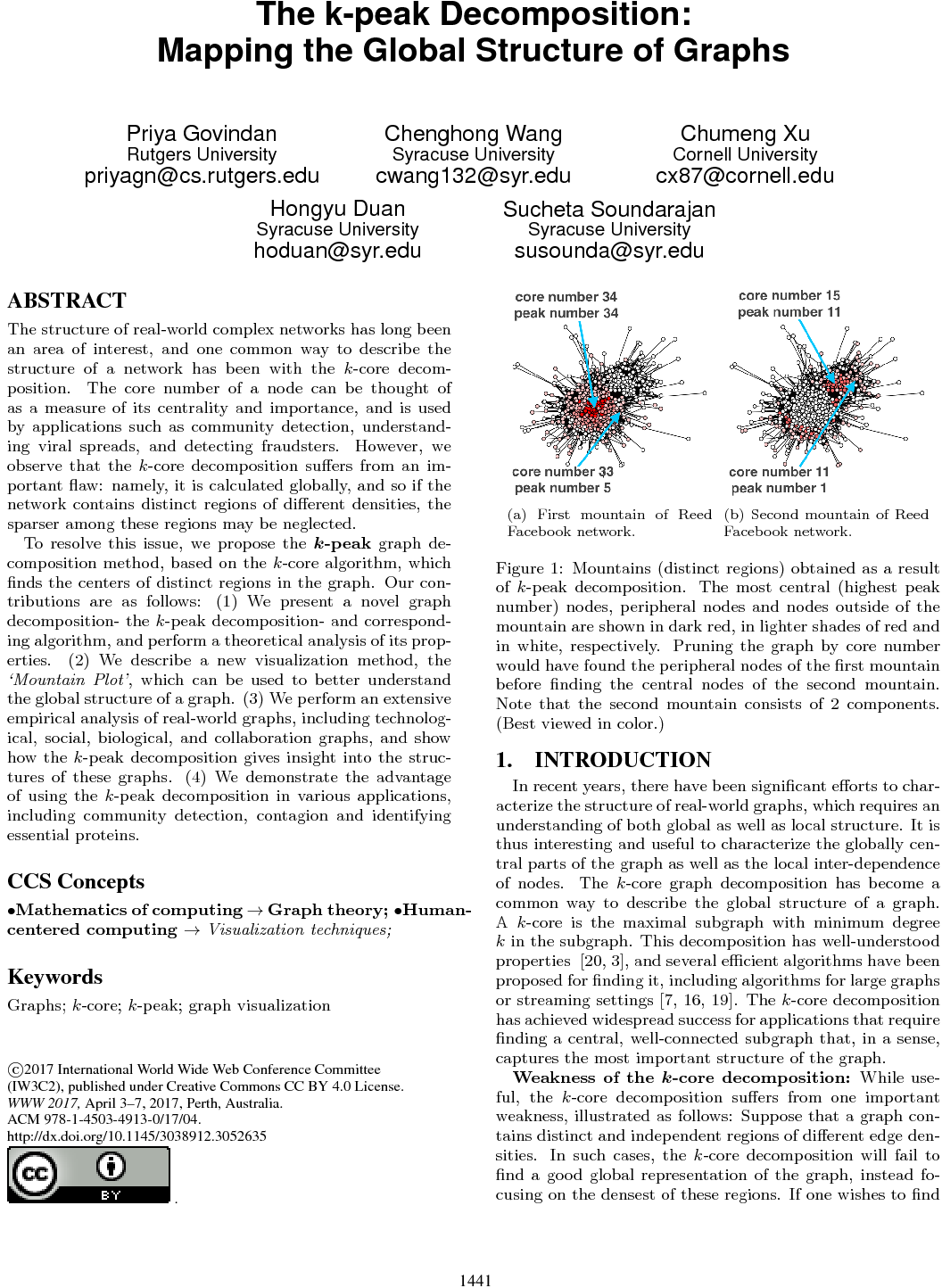

### Definition of mountain

Why is the k-peak in the k-mountain ? Why is the core number of a k-peak changed when removing the k+1 peak ?

###More informations on k-mountains

Showing a k-mountain on figure 2 or on another toy exemple would have been nice.

Besides, more theoretical insights on k-mountains are missing : complexity in time and space of the algorithm used to find the k-mountains ?

How many k-mountains include a given node ?

Less than the degeneracy of the graph but maybe it's possible to say more.

Regarding the complexity of the algorithm used to build the k-mountains, I'm Not sure I understand everything but when the k-peak decomposition is done, for each k-contour, a new k-core decomposition must be run on the subgraph obtained when removing the k-contour. Then it must be compared whith the k-core decomposition of the whole graph. All these operations take time so the complexity of the algorithm used to build the k-mountains is I think higher than the complexity of the k-peak decomposition.

Besides multiple choices are made for building the k-mountains, it seems to me that this tool deserves a broader analysis.

Same thing for the mountains shapes, some insights are missing, for instance mountains may have an obvious staircase shape, does it mean something ?

###6.2 _p_

I don't get really get what is p?

###7.2 is unclear

but it may be related to my ignorance of what is a protein.

### Lemma 2 (bis):

Lemma 2 (bis): Algorithm 1 requires $O(\delta\cdot(N+M))$ time, where $\delta$ is the degeneracy of the input graph.

Proof: $k_1=\delta$ and the $k_i$ values must be unique non-negative integers, there are thus $\delta$ distinct $k_i$ values or less. Thus, Algorithm 1 enters the while loop $\delta$ times or less leading to the stated running time.

We thus have a running time in $O(\min(\sqrt{N},\delta)\cdot (M+N))$.

Note that $\delta \leq \sqrt{M}$ and $\delta<N$ and in practice (in large sparse real-world graphs) it seems that $\delta\lessapprox \sqrt{N}$.

### k-core decomposition definition:

The (full) definition of the k-core decomposition may come a bit late. Having an informal definition of the k-core decomposition (not just a definition of k-core) at the beginning of the introduction may help a reader not familiar with it.

### Scatter plots:

Scatter plots: "k-core value VS k-peak value" for each node in the graph are not shown. This may be interesting.

Note that scatter plots: "k-core value VS degree" are shown in "Kijung, Eliassi-Rad and Faloutsos. CoreScope: Graph Mining Using k-Core Analysis. ICDM2016" leading to interesting insights on graphs. Something similar could be done with k-peak.

###Experiments on large graphs:

As the algorithm is very scalable: nearly linear time in practice (on large sparse real-world graphs) and linear memory. Experiments on larger graphs e.g. 1G edges could be done.

### Implementation:

Even though the algorithm is very easy to implement, a link to a publicly available implementation would make the framework easier to use and easier to improve/extend.

### Link to the densest subgraph:

The $\delta$-core (with $\delta$ the degeneracy of the graph) is a 2-approximation of the densest subgraph (here the density is defined as the average degree divided by 2) and thus the core decomposition can be seen as a (2-)approximation of the density friendly decomposition.

- "Density-friendly graph decomposition". Tatti and Gionis. WWW2015.

- "Large Scale Density-friendly Graph Decomposition via Convex Programming". Danisch et al. WWW2017.

Having this in mind, the k-peak decomposition can be seen as an approximation of the following decomposition:

- 1 Find the densest subgraph.

- 2 Remove it from the graph (along with all edges connected to it).

- 3 Go to 1 till the graph is empty.

### Faster algorithms:

Another appealing feature of the k-core decomposition is that it is used to make faster algorithm. For instance, it is used in https://arxiv.org/abs/1006.5440 and to https://arxiv.org/abs/1103.0318 list all maximal cliques efficiently in sparse real-world graphs.

Can the k-peak decomposition be used in a similar way to make some algorithms faster?

### Section 6.2 not very clear.

### Typos/Minors:

- "one can view the the k-peak decomposition"

- "et. al", "et al"

- "network[23]" (many of these)

- "properties similar to k-core ."

☆

1

Comments:

## About scalability:

"We apply a standard cleaning procedure (for similarity) and remove high out-degrees. In other words, if some vertex v has more than 10K followers (outdegree > 10K), we remove

all these edges. (We do not remove the vertex, but rather only its out-edges.) Intuitively, the fact that two vertices are followed by v is not a useful signal for similarity. In

flock and friendster, such vertices are typically spammers and should be ignored. For webgraphs, a page linking to more than 10K other pages is probably not useful for similarity

measurement."

- Typo: should be "more than 10K followeEs (outdegree > 10K)" not "followers".

- Such a cleaning procedure makes sense, in addition, it has a valuable side effect: in practice, after applying such a cleaning procedure, many pairs of nodes with very small non-zero similarity (in the original directed graph) have a similarity of zero (in the new graph with the out-edges of the out-hubs removed).

- Given that such a cleaning procedure is applied, a brute force approach (that computes the similarity of each pair of nodes sharing at least one in-neighbor) is scalable to a large extent.

- The following C code and experiments support the previous claim: https://github.com/maxdan94/cosineSparseMatrix

- If scalability is still an issue, in-edges of nodes with very high in-degree could also be removed.

"In our example, this turns out to be more than 100 trillion triples. This is infeasible even for a large industrial-strength cluster.".

Listing 100 trillion triples seems doable with a medium-strength cluster.

"If a matrix A has a 100 billion non-zeroes, it takes upwards of 1TB just to store the entries. This is more than an order of magnitude of the storage of a commodity machine in a cluster. Any approach of partitioning A into submatrices cannot scale."

Indeed, memory seems to be a problem. However, it is unclear why an approach partitioning A into submatrices cannot scale.

## Dataset:

"The dataset friendster is a social network, and is available from the Stanford Large Network Dataset Collection [28]."

It is unclear which friendster dataset is used. In SNAP, http://snap.stanford.edu/data/com-Friendster.html seems to be "undirected" and has 1.8B edges, not "1.6B edges" as written in Table 1 (even after removing edges of nodes with degree larger than 10,000).

## Typos and minors:

- Inside the paper $O(100B)$ looks a bit strange, because according to [the definition](https://en.wikipedia.org/wiki/Big_O_notation#Formal_definition) the set $O(100B)$ is equal to $O(0.00001)$

- should be "more than 10K followeEs (outdegree > 10K)" not "followers".

Comments: