☆

2

Comments:

One of my favorite papers.

Related to [Axioms for centrality](https://papers-gamma.link/paper/54)

☆

1

Comments:

Un jour, j'étais très heureux d'avoir été invité par Brigitte Vallée

au [Séminaire Algorithmique de GREYC](https://clementj01.users.greyc.fr/semalgo/) afin

de présenter nos travaux, intitulées [Patterns in treeshelves](https://kirgizov.link/publications/boxed_prod_DM.pdf).

Le soir du 28 Février 2017, après le séminaire, dans le train de Caen à

Dijon via Paris je pensais à la façon dont nous pouvons à partir de

chemins de Dyck afin de construire les graphes avec une certaine structure de

clusters-communautés. A mi-chemin vers cet objectif, et à mi-chemin

vers Dijon, j'ai trouvé une sous-classe très intéressante de chemins

de Dyck comptés par les nombres de Motzkin ! Ce voyage et les

discussions ultérieures avec Jean-Luc Baril et Armen Petrossian ont

donné naissance à nouvel article.

Feel free to discuss this paper that was recently accepted to publication in [Discrete Mathematics Jounral](https://en.wikipedia.org/wiki/Discrete_Mathematics_%28journal%29).

The paper is [published](https://www.sciencedirect.com/science/article/pii/S0012365X18300670)!

Il s’agit d’un très jolie classe de chemins de Dyck énumérés par les nombres de Motzkin. 👍🏼👍🏼👍🏼

☆

2

Comments:

### Very nice application to graph classification / clustering:

Frequencies of motifs are graph's features that can be plugged in a Machine Learning algorithm in order to perform classification or clustering of a set of graphs.

### Very nice application to link prediction / recommendation:

page 8 "Trends in pattern counts". Some motifs are rarely observed as induced motifs. Thus, if such a motif is seen at time $t$ in a dynamic graph, then an edge between a pair of its vertices might be created (or be deleted) at time $t+1$.

Note that the framework only counts motifs without listing/enumerating them. Some adaptations are thus needed to deal with link prediction and recommendation.

### Application to graph generation:

Generate a random graph with a given number of each of the 21 possible 5-motifs.

### Some statements might be a little too strong:

- "As we see in our experiments, the counts of 5-vertex patterns are in the orders of billions to trillions, even for graphs with a few million edges. An enumeration algorithm is forced to touch each occurrence, and cannot terminate in a reasonable time."

- The framework is absolutely critical for exact counting, since enumeration is clearly infeasible even for graphs with a million edges. For instance, an autonomous systems graph with 11M edges has $10^{11}$ instances of pattern 17 (in Fig. 1)."

- "For example, the tech-as-skitter graph has 11M edges, but 2 trillion 5-cycles. Most 5-vertex pattern counts are upwards of many billions for most of the graphs (which have only 10M edges). Any algorithm that explicitly touches each such pattern is bound to fail."

The following C code generates a program that counts from 1 to one trillion. This program terminates within 30 minutes on a commodity machine (with -O3 option for the compiler optimization).

```c

main() {

unsigned long long i=0;

while (i < 1e12) {

i++;

}

}

```

This shows that "touching" billions or trillions of motifs might actually be possible in a reasonable amount of time.

### Minors:

- "Degeneracy style ordering" might be confusing as a degree ordering is used and not a degeneracy ordering (i.e. k-core decomposition).

- Figure 7: "The line is defined by...", should be "The prediction is defined by..." as the line is just "$y=x$".

### Typos:

- "they do not scale graphs of such sizes."

- "For example, the 6th 4-pattern in the four-clique and the 8th 5-pattern is the five-cycle."

- "and $O\big(n+m+T(G)$ storage."

- "An alternate (not equivalent) measure is the see the fraction of 4-cycle"

- "an automonous systems graph with"

### Others:

- https://oeis.org/A001349

- Is it possible to generalize the framework to any k?

A program for listing all k-motifs: https://github.com/maxdan94/kmotif

☆

1

Comments:

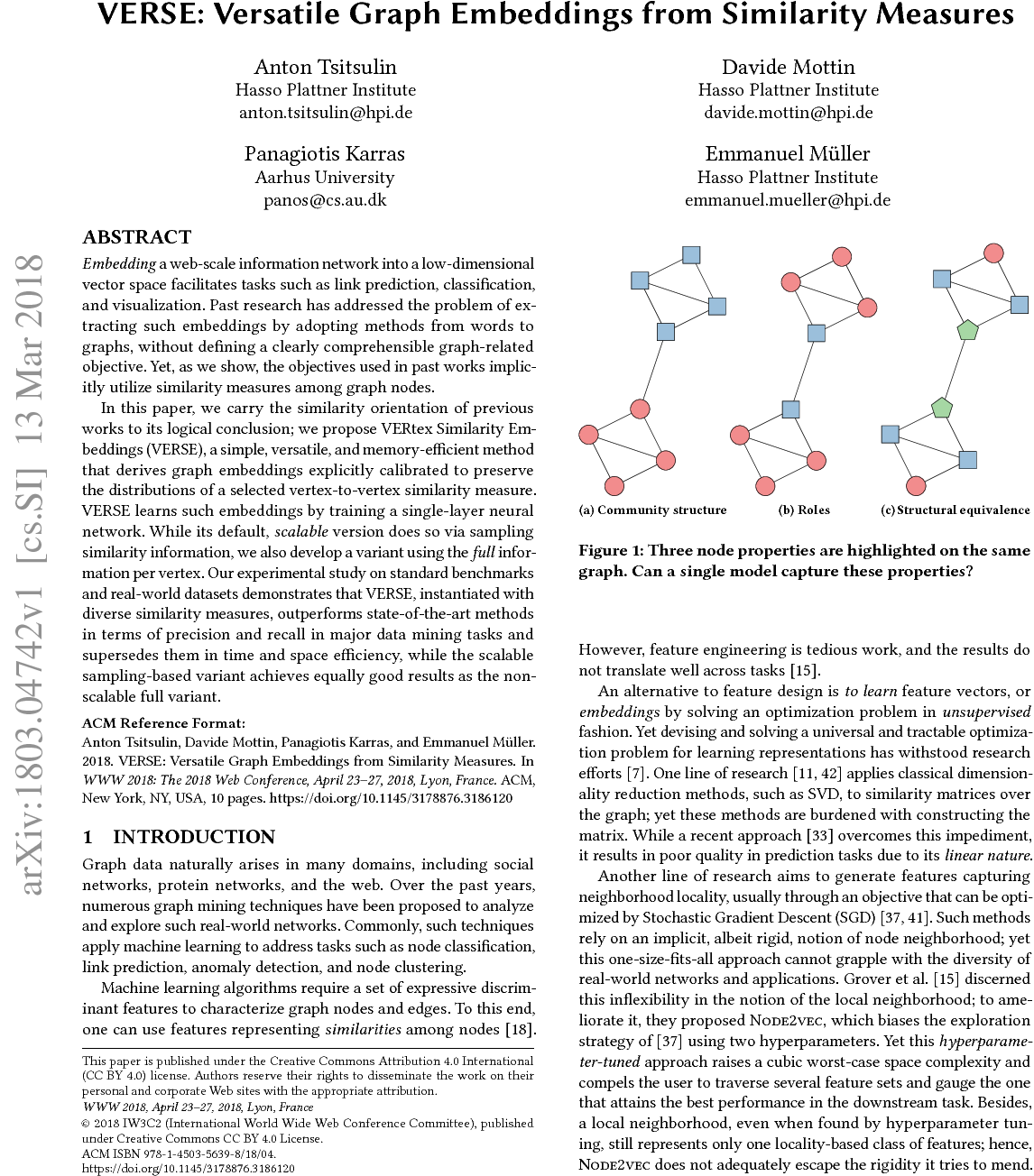

### Nice validation of the obtained embedding:

The obtained embedding seems useful for: (i) node clustering, (ii) link prediction and (iii) node classification.

### Concerns about the scalability of Louvain:

The Louvain algorithm can process Orkut in a few seconds on a commodity machine, contrary to what Table 2 suggests. The obtained modularity is of 0.68 such as written in Table 3 (the table presenting the dataset). The value of 0.68 is much more than the modularity obtained thanks to embeddings and k-means.

### Table 2 reporting the average and worst case running time is confusing:

In Table 2, $\Theta$ is used to denote the average running time and $O$ is used to denote the worst case running time. This is very confusing as worst-case running time can be given in terms of big-oh or theta.

Definitions are given here: https://en.wikipedia.org/wiki/Big_O_notation

It is also not clear how these asymptotic complexities were obtained, in particular, the asymptotic time and memory complexities of the suggested algorithm are not proven.

### VERSE Embedding Model

"We define the unnormalized distance between two nodes u,v in the embedding space as the dot product of their embeddings Wu·W⊤v. The similarity distribution in the embedded space is then normalized with softmax."

What is the intuition behind the softmax?

> Perhaps, an interesting research direction would be something like embedding-guided modularity optimization.

Maybe using the modularity-matrix (https://en.wikipedia.org/wiki/Modularity_(networks)#Matrix_formulation) as the similarity matrix used in VERSE?

This matrix can have negative entries though...

Hi!

> If you see the matrix $W$ used in LINE as the similarity matrix you use in VERSE, then yes I think that what I have suggested is similar to LINE. So VERSE can be seen as using LINE on the input similarity matrix? Is that correct?

VERSE can be seen as going beyond this ;)

If we just plug similarities into LINE training objective, we observe that (Fig. 3, NS line) the objective does not recover similarities at all! This is very surprising, and suggests that current embedding methods such as node2vec or LINE do not optimize the actual objective they claim to optimize. When I discovered that, I thought this is just a bug in the code, so I have made a version of VERSE that optimizes the random walk based similarity from DeepWalk. It turned out that DeepWalk does not preserve the similarity ranking of these walks.

> I've missed that. You mean that in LINE, "negative sampling" is used for the optimization; while in VERSE, "Noise Contrastive Estimation" is used?

Yes! To overcome the aforementioned problem, we have proposed an NCE-based sampling solution. It seems to work much better than plain negative sampling, and show that it is close to the full neural factorization of the matrix (which is only possible for VERSE model)!

>

>> Perhaps, an interesting research direction would be something like embedding-guided modularity optimization.

>

> Maybe using the modularity-matrix (https://en.wikipedia.org/wiki/Modularity_(networks)#Matrix_formulation) as the similarity matrix used in VERSE?

> This matrix can have negative entries though...

Yes, modularity matrix would probably not suit the algorithm well, as it is not probabilistic per node. Maybe some reformulation would help, I am not aware of any to this day.

Comments: