Wellcome to Alt-Tab's library,

You can find here all papers liked or uploaded by Alt-Tabtogether with brief user bio and description of her/his academic activity.

Some information about this user

☆

0

Comments:

### Short summary

##### Plan

- procedure for article selection

- recommender systems overview

- review itself: diversity measure ; impact of diversification on recommendation ; diversification methods

- conclusion and perspectives

##### Procedure for article selection

- search on Google Scholar with keywords selection

- doubles elimination

- selection of articles without additional payments

- clustering into three groups of articles (based on review plan)

##### RS overview (standard, aiming at people new to the field)

- dates back to Salton and McGill, 1980 (ref 1)

- usual standard techniques: word vectors, DT, Naïve Bayes, kNN, SVM

- applications: digital TV, web multimedia (YouTube, Shelfari (now merged into Goodreads), Facebook, Goodreads), personalized ads, online shopping

- the general process of recommendation: past users activity collection ; create user model ; present recommendation information ; feedback collection (distinguishing explicit and implicit recommendation)

- important challenges: data sparsity (working with "mostly empty user-items datasets") ; cold start (new users or items in the dataset ; overfitting (actually, rather in the sense of overspecialization)

##### Diversification

- Table 1 summarizes diversity measures

- Bradley-Smyth 2001: average dissimilarity between all pairs of items

- Fleder-Hosanagar 2007: Gini - explore with a model how diversity evolves through recommendation cycles

- Clarke et al. 2008: combined measure (ambiguity, redundancy, novelty...)

- Vargas et al. 2011: intralist diversity

- Hu-Pu 2011: perceived diversity (questionnaire)

- Vargas et al. 2012: in the line of Clarke et al. 2008

- Castagnos et al. 2013: in the line of Bradley-Smyth 2001 - develop experiments with users

- L'Huillier et al. 2014: idem

- Vargas et al. 2014: binomial diversity (mixing coverage and redundancy)

- Table 2 summarizes how diversity affects recommendation

- usually: F-measure, MAE, NMAE

- some articles show that diversification by reranking is possible without affecting too much accuracy (ex: Adomavicius et Kwon, 51)

- some address the question of trade-off between diversity and accuracy (52: Hurley-Zhang 2011, 55: Aytekin-Karakaya 2014, 56: Ekstrand et al, 2014, 57: Javari-Jalili, 2014)

- pb seen as multi-objective, looking for Pareto efficient ranking (58: Ribeiro et al 2015)

- Table 3 summarizes diversification algorithms

- many methods are reranking from accuracy-based ranking (59: Ziegler et al. 2005, 51 et 61: Adomavicius-Kwon 2012, 2011, 62: Premchaiswadi et al 2013)

- then various strategies, depending on the method, on the type of data, whether the authors question temporal aspects

- underlying idea is that the original algorithm (typically CF) is already diverse, just needs reordering

##### Conclusions

- no consensus on a diversity metric

- increasing diversity does not necessarily means sacrifice accuracy

- various challenges: not enough live studies ; work in psychology would be useful ; how to use systems which have a lot of different types of items ; how to diversify during the reco process (and not a posteriori)

☆

0

Comments:

# In short

CIKM 2015 article, one of the core reference on HIN-based recommendation, they consider a weighted variation which allows them to deal with rated cases.

# Summary

### 1. Introduction

##### context and problem

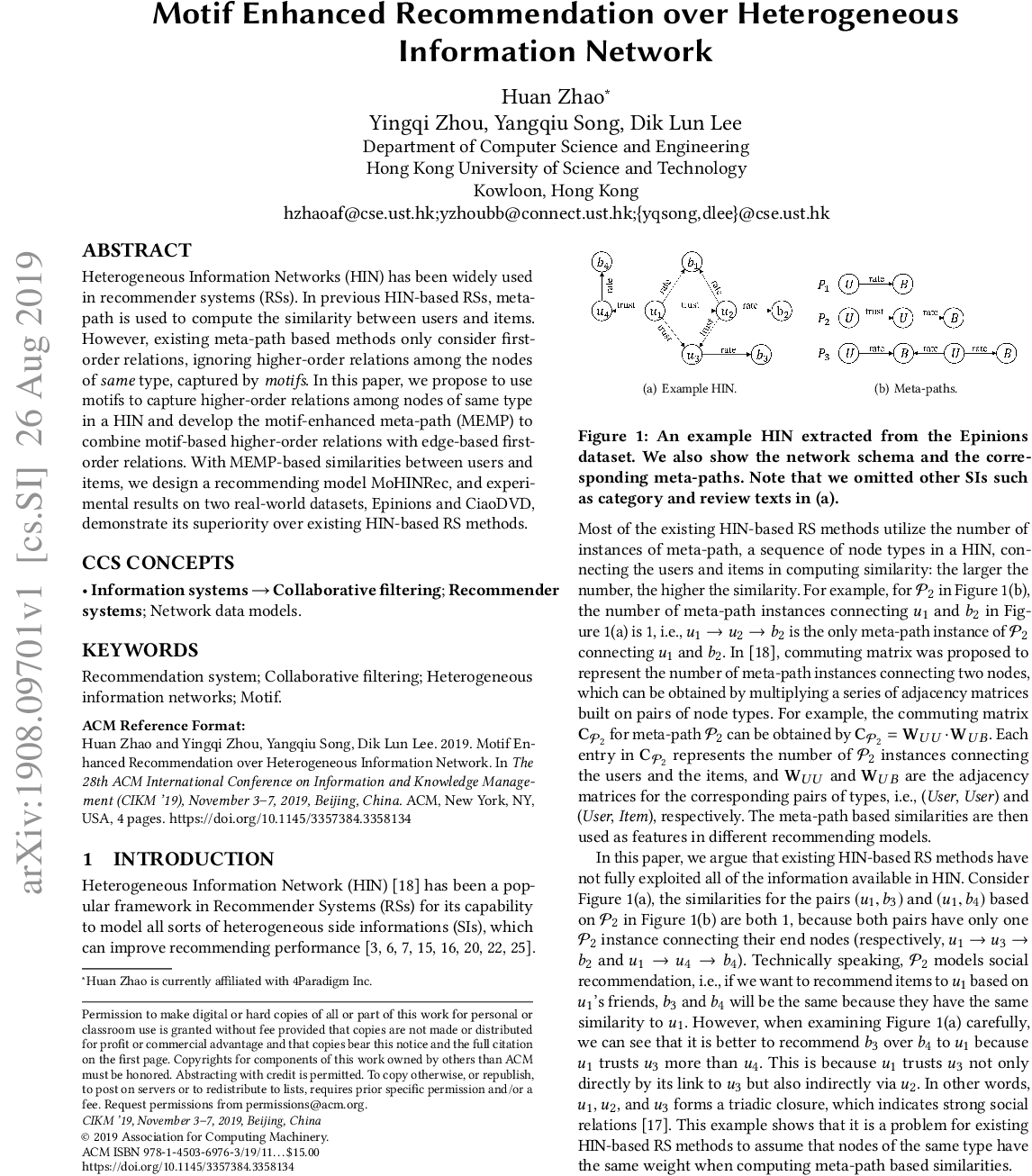

- more and more research on HIN for data mining: similarity search, clustering, classification

- recently on reco: 2 (Jamali et al), 7 (Luo et al), 15 (Sun et al): interesting because possibility to integrate various kind of information (cf Fig 1), ex: user-movie-user is more or less a CF model

- pb1: no values on links while recommendations are often associated with ratings (ex: Tom and Mary on Fig1, who have seen different movies but very different ratings) => necessary to generalize the formalism of HIN in order to account for the link weights

- pb2: problem to combine information from different meta-paths, a good method of weight learning should allow to obtain personalized weights, weights should contribute to explanation, but if we personalize recommendation data sparsity problems get worst

- contributions: extend HINs to weighted case ; propose SemRec (semantic path based RS) which flexibly include information of heterogeneous nature ; define consistency rule of similar users to circumvent data sparsity pb ; empirically study 2 datasets: Yelp and Douban

### 2. Heterogeneous network framework for recommendation

##### 2.1 Basic concepts

- HIN for the weighted case (as usual, but with weights on one or more relation) ; illustration from Fig 2a

- extended meta-paths to paths with attributes: links weights must be in a given range (give illustration)

##### 2.2 Recommendation on HIN

- Table1: semantic example associated to meta-paths

- Discussion about how different RS models will use meta-paths

##### 2.3 Similarity measure based on weighted meta-path

- go through literature reco models based on paths in HIN (but no WHIN): PathSim (12), PCRW (4), HeteSim(10) ; cannot be simply transposed as they have no notion of weight

- they adapt to this context with "atomic meta path": meta-path where weights take specific values

- illustration on Fig3 on the score decomposition in the same fashion as PathSim (which counts meta-paths) ; notice the normalization step

### 3. The SemRec solution

##### 3.1 Basic idea

- principle: evaluate similarity between users based on weighted and unweighted MP then infer scores from similar users

- preference weights are given to different MP

- difficulties: combine recommendations generated by different MP

- pb1: important bias due to the fact that some types of paths are more numerous than others => similarity based on paths does not necessarily reflect similarity between objects => some kind of normalization to avoid that

- pb2: we should personalize recommendations for better efficiency, but sparser data => recommendation by user groups with same preferences

##### 3.2 Recommendation with a single path

- presentation of the method for one path (before generalizing)

- supposing ratings from 1 to N

- we have user similarity matrix for this type of specific path

- compute Q_u,i,r: intensity of user u evaluating r item i from the similarity sum between users according to meta-path P_l

- score predicted is the weighted average of ratings over Q_u,i,r

##### 3.3 Recommendation with multiple paths

- now if we use several MP...

- 3.3.1: compute weights to ratings corresponding to each type of path by minimizing mean squared error between actual and predicted scores

- 3.3.2: personalized learning: each user as a weight vector, then same principle: minimizing MSE but with different weights with different users

- 3.3.3: add a regularization process: learning difficult when we have few data => we use similar users ; regularization term to have the weight of a user similar to its neighbors average weight => eq 9 general form of the optimization goal ; optimization by projected gradient until convergence

##### 3.4 Discussion

- general form of the objective function is L_3 in eq9

- if parameter lambda_1=0 => L_2 (equation 6)

- if parameter lambda_1=infinity => L_1 (equation 4)

- lambda_1 controls the level of personalization (the lower, the more personalized)

- complexity analysis

### 4. Experiments

##### 4.1 Datasets

- Douban (13400 u, 12700 i (= movies), 1M ratings de 1 à 5)

- Yelp (16200 u, 14300 i (= buisnesses), 200K ratings de 1 à 5)

- Douban clearly denser than Yelp - cf Tab.2

##### 4.2 Metrics

- accuracy evaluated with RMSE and MAE

##### 4.3 Comparison methods

- 4 variants of their own model: SemRecReg (comprehensive), SemRecSgl (one type of MP), SemRecAll (same weight for everyone), SemRecInd (personalized weights, but no regularization (?))

- and methods from the literature: PMF (classic MF), SMF (PMF + reg), CMF (MF with HIN structure), HeteMF (MF + reg based on entity similarity)

- no MP longer than 4

##### 4.4 Effectiveness experiments

- different settings training/test: 20/80, 40/60, 60/40, 80/20 for Douban ; 60/40, 70/30, 80/20, 90/10 for Yelp (because sparser)

- Tab4: SemRecReg always have better performances in all conditions

- other trends: SemRec with multiple paths in general better than SemRec with simple paths ; sparsity implies that SemRecInd is worse than SemRecAll in most circumstances (maybe I misunderstood something before) ; regularization has beneficial effects

##### 4.5 Study on cold start problem

- cold start translated here by smaller training and larger test

- Fig4: SemRecReg is clearly more performing in this context

##### 4.6 Study of weight preferences

- explore the importance of weighted meta-paths (versus unweighted)

- we can give some "slack" by imposing less constraints on scores (for example rating +/- 1)

- Fig6: very demonstrative, perfs are clearly better when constraints are harder

### 5. Related work

- ref 11 : HIN for data mining (Sun et Han, 2012)

- ref 12 : similarity measures in HIN with PathSim (Sun et al. 2012)

- ref 10 : similarity measures in HIN with HeteSim (Shi et al. 2014)

- ref 2 : HIN for RS (Jamali and Lakshmanan, 2013)

- the cold start question, difference depending on the technique used (MF, CF...)

- ref 15 : closest to this paper, HeteRec (Yu et al., 2014) but do not use weighted paths

Comments: